Fighting back from Drupal hacks

The last thing any website owner, developer or administrator wants to hear is that they've been hacked. Whether the cause was insecure passwords, problematic file permissions, a vulnerability in the underlying code or any of myriad other potential issues, it's an undesirable situation to be in.

When Drupal 7.32 was released and SA-CORE-2014-005 (CVE-2014-3704) was first made public, site operators had as little as 7 hours to upgrade before the hack attempts started.

With such a short window to upgrade, if websites weren't hosted with managed providers who could step in and provide mitigation and support, options to tackle the vulnerability were drastically limited. Where sites were upgraded late, the delays were attributable to:

- Large numbers of sites to upgrade with resources available and capable of performing patching stretched thin;

- Low internal skill level requiring professional consultation to assist patching and deployment;

- Developers who do not follow the community or security advisories and were therefore unaware of SA-CORE-2014-005 or its severity;

- Slow moving or heavily bureaucratic organisations hampering agile development; or

- Those who just want to watch the world burn.

After the fact, resources were released to assist website owners who were not quick enough to upgrade and were concerned, rightly, that they may have fallen victim to a successful hacking attempt. User guides, flow charts, and code solutions were created with the aim to provide assistance to those in a difficult situation. The underlying message across all of these resources was clear:

If the site was not upgraded within 7 hours, assume it was hacked and rebuild.

Recently I was provided with the opportunity to work on non-Acquia hosted sites which had not been upgraded to Drupal 7.32 until 10 days after the release. I'm hoping that by documenting the steps that I and my colleagues took in response, it will serve as a guide for others in similar positions. Despite the caveat that there is always the possibility that a hack or exploit was undetected, our aim was to verify the state of these sites, purge any hacks/exploits we found and put the sites on new infrastructure.

Battle Plan

Every Drupal site comprises at least code, a database, and files. Each of these requires slightly different tactics when checking for potential exploits. Below, I've documented each of the steps taken against the various site components. Attached is a working document we created drawing on advice provided by the Drupal community, our own internal best practices, and tests at Acquia. As we progressed through the steps, each could be checked off as a way to measure our progress. I'd like to emphasize that keeping an offline forensic copy of a potentially hacked site is important and should be the first step in this process.
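As a rough illustration of that first step, the sketch below archives a directory and records a SHA-256 checksum next to it, so the copy can later be shown to be unaltered. The function name `forensic_copy` and the paths in the usage note are hypothetical, and it assumes `tar` and `sha256sum` (GNU coreutils) are available; it is not the exact procedure we followed.

```shell
# Archive a directory and store a checksum beside the archive.
# Usage: forensic_copy SOURCE_DIR DEST_DIR
forensic_copy() {
  local src_dir="$1"   # directory to preserve, e.g. the site docroot
  local dest_dir="$2"  # where the archive and its checksum are written
  local stamp archive

  stamp="$(date -u +%Y%m%dT%H%M%SZ)"
  archive="$dest_dir/forensic-$stamp.tar.gz"

  mkdir -p "$dest_dir" || return 1
  # -C makes the paths inside the archive relative to the parent of src_dir.
  tar -czf "$archive" -C "$(dirname "$src_dir")" "$(basename "$src_dir")" || return 1
  # Write the checksum file; it can be verified later with `sha256sum -c`.
  (cd "$dest_dir" && sha256sum "$(basename "$archive")" > "$(basename "$archive").sha256") || return 1
  # Print the archive path so callers can log it.
  echo "$archive"
}
```

It could be called as `forensic_copy /path/to/codebase /mnt/offline/evidence`. A database dump placed inside the source directory beforehand would be captured in the same archive.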

Working with two colleagues, we each took one of the code, database and files, then rotated until each component had been looked at by three sets of eyes. Following all of these steps, each component was migrated onto new hardware, where it happily resides today.

Database

Attempting to audit a database without a recent database backup to roll back to will be painful and exhausting. Without a recent backup to use, our example site needed to have its database checked by hand. The difficulty of this process was reduced by the following lucky facts:

- A database backup was available to compare against (albeit not a recent one);

- The database wasn't massive (~20 users & ~2000 nodes/associated field content);

- Content was not added at high rates making the database relatively slow moving.

While some areas of the database would need to be checked by hand, many could be ruled out with just a few command line snippets.

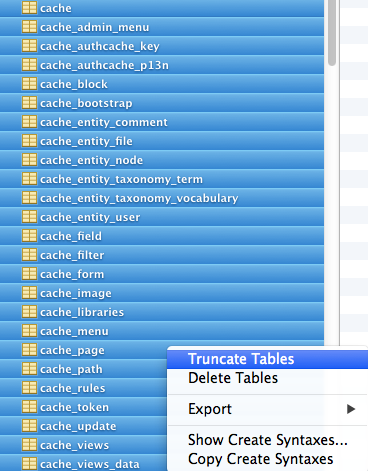

Truncate all transient tables

Any table that stored non-essential data could be purged to ensure nothing persisted past the audit. These included, but were not limited to, cache tables, logging tables, session stores and even search indices. Anything that could be rebuilt and did not contain canonical data was fair game.

In our case, using a database editor such as Sequel Pro made this trivial, as it was simply a case of selecting tables and truncating them in the UI. However, if you have no UI, or just want to play on the command line, then the following may be used. Additional tables may be added to the TRUNCATE array if necessary:

$ MYSQL_USER='username'

$ MYSQL_PW='password'

$ MYSQL_DB='db_name'

$ TRUNCATE=($(mysql -u"$MYSQL_USER" -p"$MYSQL_PW" "$MYSQL_DB" -s -N -e "SHOW TABLES LIKE 'cache%';"))

$ TRUNCATE+=('sessions' 'watchdog' 'search_dataset' 'search_index' 'flood')

$ for table in "${TRUNCATE[@]}"; do echo "Truncating $table"; mysql -u"$MYSQL_USER" -p"$MYSQL_PW" "$MYSQL_DB" -e "TRUNCATE $table;"; done

Checksum the remaining tables against any backup

Once the tables had been truncated, each table in the database was checked for changes against the known backup. I scoured the internet for something that shows table diffs but could not locate anything conclusive. Diffing table dumps would have been eye-bleedingly-fun, so instead I opted to turn to the trusty percona-toolkit.

Percona-toolkit is easy enough to install with either brew, yum or another package manager, and it comes with a tool called pt-table-checksum. Whilst it is meant to be used for ensuring consistency across multi-master and master-slave database setups, it can also be used to compare two databases by using the following command:

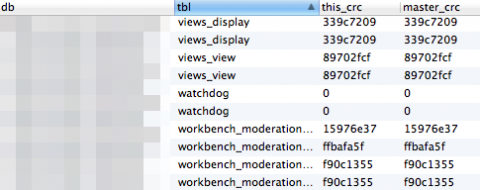

pt-table-checksum --ask-pass -uroot --databases=my_db_backup,my_db_live --nocheck-plan

This command dumps a bunch of data in the checksums table of the percona database. This data can then be examined to determine whether any tables are different between the backup and the live database. In the example below, the watchdog table is empty, the first workbench_moderation table differs, but all other tables are the same.

Dealing with the remaining tables

It was likely the remaining tables would include the node, field and user tables. Any content change or user log in would, of course, cause the checksums of the tables to differ. At this point, the most thorough way of ensuring database sanitization was to import all tables from the backup not cleared by the above steps and then add in content manually, which was the approach we took.

However, as this can potentially take a lot of time, an acceptable alternative considered was to:

- Manually check recently added rows (new nodes / new users)

- Run queries to determine where unsafe filter formats are being used and examine them (especially important where PHP filter is enabled)

- Grep a database dump against common potential exploit code (base64, $_POST, eval etc)

The decision to start again from backup or attempt to clean an existing table is really one of effort versus risk, so it is one that should be made after careful consideration.
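Two of the checks in the list above can be sketched as shell functions. The first prints SQL that lists users and nodes created since a given Unix timestamp, assuming a stock Drupal 7 schema with no table prefix. The second greps a database dump for constructs that often appear in PHP exploits. Both function names are hypothetical, and the pattern list extends the examples above with a few guesses of my own; expect false positives and treat a clean result as weak evidence only.

```shell
# Print SQL listing users and nodes created at or after a Unix timestamp.
# Usage: recent_rows_sql 1413331200 | mysql -u username -p db_name
# (1413331200 is 2014-10-15 00:00:00 UTC)
recent_rows_sql() {
  local since="$1"
  # Refuse anything that is not a plain integer before it reaches SQL.
  case "$since" in
    ''|*[!0-9]*) echo "usage: recent_rows_sql EPOCH" >&2; return 1 ;;
  esac
  cat <<SQL
SELECT uid, name, mail, created FROM users WHERE created >= $since ORDER BY created;
SELECT nid, type, title, uid, created FROM node WHERE created >= $since ORDER BY created;
SQL
}

# Print numbered lines of a dump that match common exploit constructs.
# Usage: scan_dump dump.sql
scan_dump() {
  grep -nE 'base64_decode|eval[[:space:]]*\(|\$_(POST|GET|REQUEST|COOKIE)|gzinflate|str_rot13' "$1"
}
```

Creating the dump with `mysqldump --skip-extended-insert` puts each row on its own line, which keeps the matches readable.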

A useful snippet for detecting fields that use the PHP filter format is the following. Again, this is not something to rely on 100%, but it provides an excellent method of finding the highest risk areas where exploits may be.

<?php

// Text formats to flag; 'php_code' is the machine name of the PHP filter format.

$formats = array('php_code');

// Skip deleted fields, whose data tables use a different name.

$fields = db_query("SELECT field_name FROM {field_config} WHERE module = 'text' AND deleted = 0")->fetchCol();

foreach ($fields as $field) {

  $format_field = $field . '_format';

  $field_table = 'field_data_' . $field;

  $result = db_query("SELECT entity_type, entity_id FROM {" . $field_table . "} WHERE $format_field IN (:formats) GROUP BY entity_id, entity_type", array(':formats' => $formats));

  if ($result->rowCount()) {

    foreach ($result as $entity) {

      $output = sprintf('Entity type %s with ID %d contains the field %s with PHP format', $entity->entity_type, $entity->entity_id, $field);

      echo $output . PHP_EOL;

    }

  }

}

Code

Because a recent copy of the code wasn't available and the code wasn't versioned, the following steps were followed.

We downloaded a copy of the Drupal core version being used by the site at the time of the security risk. The site's codebase was then diffed against it to reveal changes to Drupal core. Any additional patches were matched to the differences in the codebase during this step. It was important to check the potentially insecure codebase against a freshly downloaded copy of the appropriate version of Drupal to minimise the changes requiring investigation.

After that, contributed modules were diffed against the relevant version of the module to ensure no changes had been made. While the Hacked! module provided some ability to easily check modules for modified or deleted files, there was no provision for checking if new files had been added.
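Checking for added files can be done with plain diff, assuming a pristine copy of the same module versions has been downloaded alongside the live code. The sketch below filters the output of `diff -rq` down to paths that exist only in the live tree. The function name `added_files` is hypothetical and the paths in the usage line are placeholders.

```shell
# Print paths that exist in the live tree but not in the pristine tree.
# Usage: added_files /tmp/audit/modules /path/to/codebase/path/to/modules
added_files() {
  local pristine="${1%/}" live="${2%/}"
  # LC_ALL=C keeps the "Only in DIR: NAME" messages from diff in English.
  LC_ALL=C diff -rq "$pristine" "$live" | awk -v prefix="Only in $live" '
    # Keep only messages about the live tree: the prefix must be followed
    # by ":" (the top directory) or "/" (a subdirectory).
    index($0, prefix) == 1 {
      next_char = substr($0, length(prefix) + 1, 1)
      if (next_char == ":" || next_char == "/") {
        sub(/^Only in /, "")   # drop the message prefix
        sub(/: /, "/")         # join directory and file name into one path
        print
      }
    }
  '
}
```

A directory that exists only in the live tree is reported once, as the directory itself, so its contents still need to be inspected by hand.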

Custom modules and features were the final, and possibly most difficult, piece of the codebase to check. This was because, in some cases, there may be no record of this code anywhere online, particularly where developers were working directly on production. In cases such as these, each line of each custom module would have to be checked to ensure no nasty surprises lurked within. Luckily for us, the example site had kept backups of their custom code so we were able to diff against those backups and confirm all was well.

The site we were working on was running a Drupal 7.28 codebase, so it had to be compared to Drupal 7.28 core. Similarly, each of the modules was outdated, so we needed to ensure we were downloading the correct module version before we ran each check. Where a site has a make file, it's simple to create a clone based on that. If the site you want to check does not contain a make file, all is not lost. You just need a little more hacky drush-fu.

Placing the following in /path/to/codebase/auditdownload.php and running drush scr auditdownload.php will download the correct version of Drupal core and of every enabled module into /tmp/audit:

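<?php

if (!is_dir('/tmp/audit/modules')) {

  mkdir('/tmp/audit/modules', 0777, TRUE);

}

// Map each project name to the version currently installed.

$projects = array();

foreach (module_list() as $module) {

  $info = system_get_info('module', $module);

  // Custom modules carry no project or version information, so skip them.

  if (!empty($info['project']) && !empty($info['version'])) {

    $projects[$info['project']] = $info['version'];

  }

}

foreach ($projects as $project => $version) {

  echo sprintf('Downloading %s version %s', $project, $version) . PHP_EOL;

  // Core goes in /tmp/audit, everything else in /tmp/audit/modules.

  $destination = ($project === 'drupal') ? '/tmp/audit' : '/tmp/audit/modules';

  system('drush dl --destination=' . escapeshellarg($destination) . ' ' . escapeshellarg("$project-$version"));

}

The diff command can then be used to show any discrepancies: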

$ diff -rq /tmp/audit/drupal-7.28/ /path/to/codebase

Files /tmp/audit/drupal-7.28/.htaccess and /path/to/codebase/.htaccess differ

$ diff -rq /tmp/audit/modules/ /path/to/codebase/path/to/modules

Only in /path/to/codebase/path/to/modules: old_ckeditor_version

Only in /path/to/codebase/path/to/modules: allmodules.zip

Only in /path/to/codebase/: files

Files

Where a recent backup of the files directory isn't available, running the following commands will do basic checking to ensure there aren't any PHP files in the directory.

$ find sites/adammalone/files/ -iname "*.php"

$ find sites/adammalone/files/ -type f -exec file {} \; | grep -i PHP

Other extensions and filetypes can be checked by altering the command, e.g. for Windows executables:

$ find sites/adammalone/files/ -iname "*.exe"

$ find sites/adammalone/files/ -type f -exec file {} \; | grep -i executable

Additionally, the .htaccess for files directories should include the following section, which reduces the attack surface in future by manually specifying the handler.

<Files *>

# Override the handler again if we're run later in the evaluation list.

SetHandler Drupal_Security_Do_Not_Remove_See_SA_2013_003

</Files>

As an additional step, an antivirus can be run over the files directory to ensure nothing exists there that looks suspicious. ClamAV was used in this instance; it is simple to install, update with the latest virus definitions, and run. I found the following options to be favourable:

clamscan -irz --follow-dir-symlinks=2 --follow-file-symlinks=2

At this point, our work was complete and the site was restored.

In summary, running through these checks was a lengthy process that provided me with a far more complete understanding of the available attack surfaces once a Drupal site is compromised. While we were only dealing with small sites, a larger site would have required a lot more effort and produced far more tears.

While no one can predict when vulnerabilities will surface, the fallout (and tears) of these security vulnerabilities can be reduced with solid, regular backups, keeping code in VCS and ensuring servers are hardened. Additionally, in times of security crisis, another obvious alternative to implementing this yourself is to utilise the services of a managed hosting provider, which can provide you support and timely assistance.