Tracing errors in Drupal

I get to see a lot of different Drupal issues in my day to day at Acquia. From relatively simple problems that can be fixed with altered configuration, a cache clear or a little custom code, to almost untraceable bugs with roots deep in the Drupal bootstrap; where those debugging must tread with care. Usually, we exist as the resource consulted with, after the customer is at their wit's end and finds themselves needing us to shed light on what seems like an impossible situation.

In early February, and with the clock ticking on an impending launch, one such customer got in touch with a bug that had stumped everyone on their team. One of the first steps we take when trying to get to the bottom of a bug for a customer is to attempt to replicate the issue. This allows us to start narrowing down and focusing in on the cause in order to head towards the process of finding a fix.

The problem with this particular issue was that replication was both unreliable and fluxional. On occasions, pages would timeout, return partial content and tokens would not be converted correctly. The best clues we had to go on was that these incidents occurred on cache clears and cron runs… sometimes. Attempts to replicate the illusive issues in the customer’s development and staging environments failed with no errors observed. Additionally, replication of the problems was similarly temperamental on a local clone, no matter how many clones were attempted. The overarching symptom, however, appeared to be related to tokens; with the page title remaining the unparsed '[site:name] | [node:title]'.

In addition to the problems above, we were hindered by two additional ancillary issues that got in the way of debugging:

- Pending database updates, each of which could have both caused or exacerbated the problems

- Huge numbers of notices in the log and appearing on execution of actions with drush. This made it hard to determine an obvious error (remember this for later).

Digging deeper

At this point in the debugging cycle, it was desired to create an environment that matched 1:1 with the production environment. Where production utilised dedicated load balancers, many webservers and highly available database servers; the staging and development environments did not. A replica environment was created in order to match exactly and provide the best route towards a successful replication. With more resources pulled in to assist in the debugging effort, and the urgency increasing due to the countdown to launch we were able to echo what was occurring on production to about the same level of reliability; on cache clears and cron runs… sometimes.

The combination of an exact replica environment, with more eyes on the problem, told us that with both cache flush operations and cron runs, certain caches were purged. The idea then, was that incorrect values were getting stored in the cache, after only a partially complete cache set. It was then decided to completely disable the cache on the replica environment. This would remove a variable we couldn't control, as well as follow our instincts to determine why cache wasn’t being set correctly. This was achieved with the following placed in the site's settings.php as directed in caching documentation.

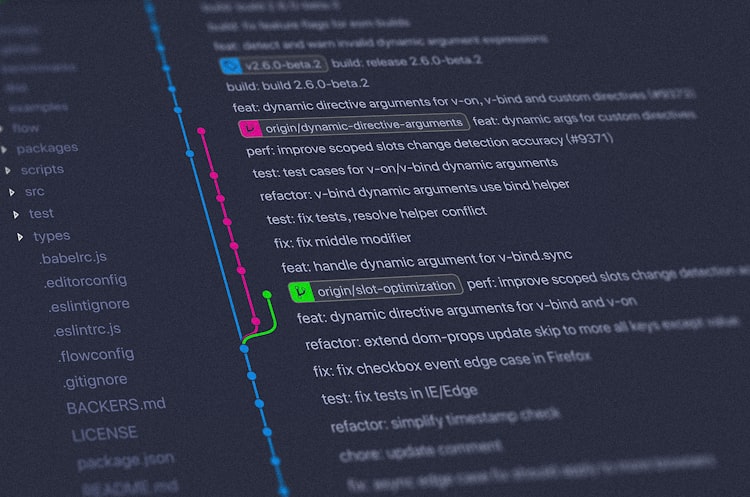

$conf['cache_backends'][] = './includes/cache-install.inc'; $conf['cache_default_class'] = 'DrupalFakeCache';This key step allowed us to reliably replicate all issues on every page load, a gold-mine in debugging terms, which brought us a lot closer to being able to get to the bottom of the issue. The decision was made to run through an entire bootstrap with XDebug to analyze the page load and variables.. This allowed us to observe all variables at every stage of the Drupal bootstrap; a task which paid off with no exceptions here. Next, we started looking from tokens module to core and the operations that happened early on in the bootstrap. Any time I'm debugging in module.inc or Entity API, I keep getting that nagging feeling that I've gone way too deep, then dive in to go deeper. Very much inception tactics of debugging.

Some of the variable enumeration that XDebug aided with showed me that when modules were initially loaded, only half got loaded successfully. One of the unloaded victims was system.module which contains token hooks. These hooks were not being placed in the cache so [page:title] wasn't getting replaced!

The Cause

When Drupal first loads all its modules in _drupal_bootstrap_variables() via module_load_all(), it runs through the list of modules and includes them for later execution. Drupal takes the functions and hooks from each module and stores them in the cache so hooks may be implemented at will later on without having to scan all the files again.

What if there’s some kind of error in the include of a module, when Drupal is running on a freshly cleared cache_bootstrap? Rather than completing the bootstrap, Drupal will divert to its error functions and stop execution of the initial bootstrap. Since Drupal can only get part-way into its bootstrap, it can only cache some of the hooks. This caused a number of modules to not be loaded correctly and as such, their hooks weren’t available later on in the Drupal page load when they were required. Remember how tokens weren’t being replaced in the page title, that was due to some implementations of hook_token_info not being discovered and cached.

On subsequent page loads, since there are already cached items (albeit incomplete items), Drupal will adhere to what it has in its cache. The problem that caused all of this was a simple error in a custom module although it was made far worse by the large number of notices flooding the logs. These hid the one notice that was causing this chain of errors. A single line including a file that didn’t exist with no additional error handling was the root cause of all the problems and commenting out the line fixed absolutely everything.

What we can learn from this?

This just goes to show how a small error can be made far more problematic when the actual issue isn't easily observed due to huge numbers of other errors. An apt saying at this point is that we couldn't see the wood from the trees in terms of notices to know which was pertinent. Another issue was that the file include was not wrapped in any sort of check to determine if the file existed. Nor was it preceded by an '@' which would have suppressed the errors in a similar manner. Additionally, the red herring of ~30 database updates that could not be completed served as a distraction to the ultimate goal of finding this issue. A clean codebase with up-to-date modules and themes spawning no errors would have had this resolved in a fraction of the time.

Defensive coding, here, would have helped but I'm just glad I got to work on another crazy Drupal issue!